Background

I have had a lab setup at home with 2 ESXi servers going back to a Linux Ubuntu ZFS server for some time (Server Rack). The Linux server is essentially acting as a homemade SAN. The ZFS server presents a ZVOL via SCST over 56Gb SRP (InfiniBand) to the ESXi hosts. The ZVOL is backed by high-performance PCIe SSDs. SRP is an RDMA protocol that is highly efficient compared to iSCSI, and SRP disks appear to the ESXi hosts as local disks. The 56Gb link is formed via Mellanox ConnectX-3 cards, which are capable of either 40Gb Ethernet or 56Gb InfiniBand. InfiniBand generally has extremely low latency and low CPU usage when compared to other connectivity methods.

The 100Gb adapters are Mellanox ConnectX-4 adapters. They are capable of 100Gb Ethernet or 100Gb InfiniBand. Unfortunately, there are no ConnectX-4 InfiniBand drivers available for ESXi at this point. This is why for this test we are pitting 100Gb iSCSI against 56Gb SRP. 100Gb SRP would obviously be my preferred connection method. I have spoken to Mellanox and they have no immediate plans to make a ConnectX-4 compatible SRP driver for ESXi. That is unfortunate, but it is of course due to demand. In my opinion they have not made the benefits of InfiniBand widely known to the VMware community.

56 Gb SRP

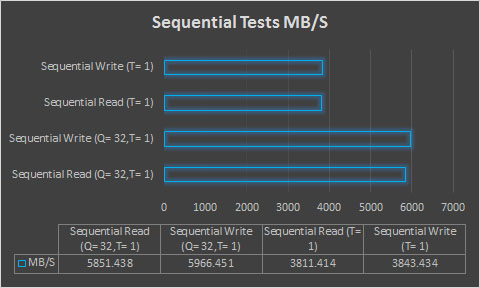

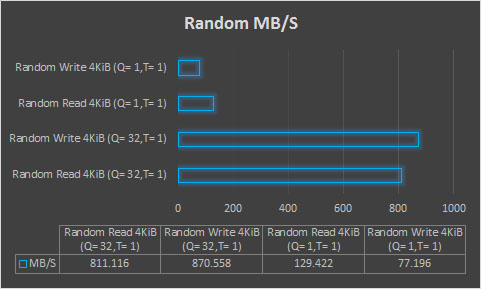

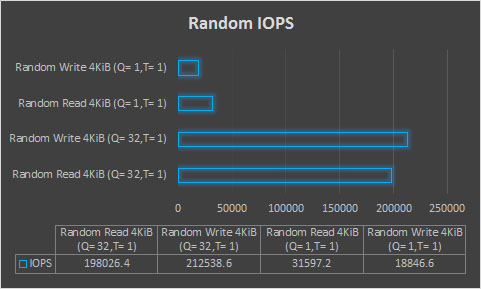

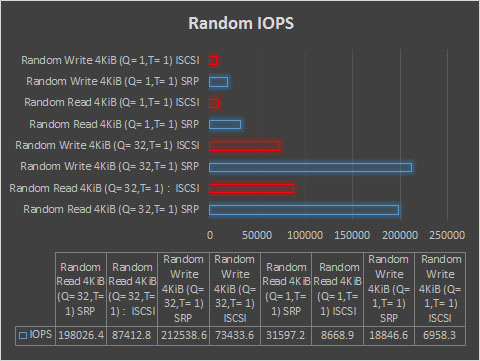

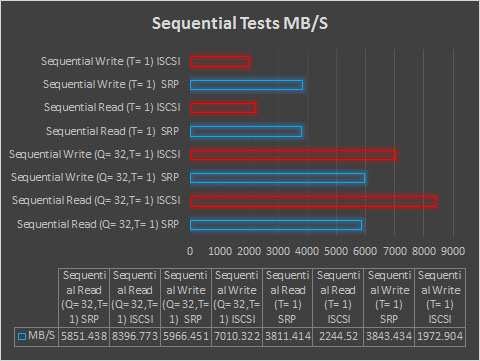

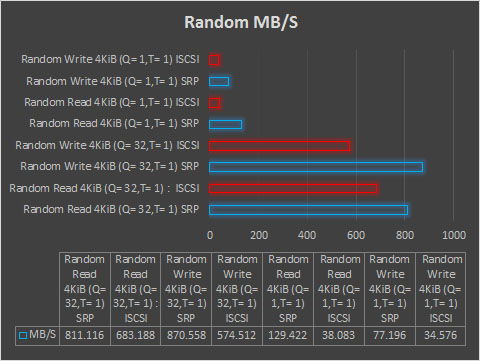

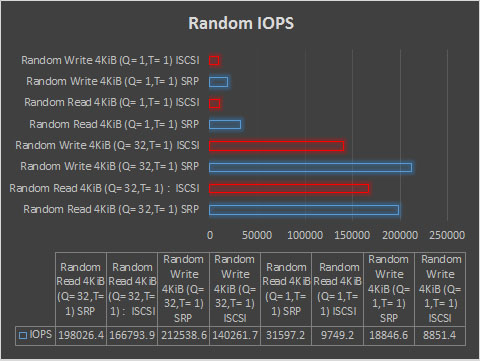

My SRP environment was pre-existing and will be the baseline that the 100Gb results are compared to. For the purpose of this post I will focus more on the 100Gb setup. Here are the results that we hope to beat. Please note the tests are run with 1 thread; higher thread counts tend to increase the results. I strive for the best single-guest performance possible. Many people will benchmark 12 or so I/O initiators at once, but I feel that can mask the rather poor performance of a single VM.

The above results speak for themselves. These tests are being run from a single Windows 10 VM guest hosted on ESXi. The VM is allotted 2 CPUs and 8 GB of RAM.

100 Gb Ethernet (iSCSI) - Linux Target

As stated before, there is currently no option to run the Mellanox ConnectX-4 cards in InfiniBand mode on ESXi. The VPI cards that I have can be switched between Ethernet and InfiniBand via a config utility. We will begin the entire process by getting the ConnectX-4 card to show up as an Ethernet adapter on the Linux target and configuring it with an IP address.

1. `modprobe mlx5_core` This loads the driver for the ConnectX-4 cards.

2. `mlxconfig -d /dev/mst/mt4115_pciconf0 set LINK_TYPE_P1=2 LINK_TYPE_P2=2` This sets both port 1 and port 2 on the card to Ethernet instead of IB. The /dev/mst device node is created by running `mst start` from the Mellanox Firmware Tools.

3. `reboot`

4. `ifconfig -a` We now see the 2 adapters listed as network adapters, in our case enp4s0f0 and enp4s0f1. For the example I will focus on just the first adapter.

5. `ifconfig enp4s0f0 mtu 9000` This enables jumbo frames. Please note that ifconfig settings do not persist through a reboot. To make them permanent you would need to edit /etc/network/interfaces.

6. `ifconfig enp4s0f0 192.168.151.1/24 up` This configures the interface with an IP address and enables the interface.
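Since the ifconfig settings are lost on reboot, here is a minimal sketch of what a persistent entry could look like. It assumes the classic ifupdown format used by /etc/network/interfaces (not netplan) and reuses the interface name, address, and MTU from the steps above. The helper name `print_iface_stanza` is purely illustrative, and the function only prints the stanza so it can be reviewed before being added to the file.

```shell
#!/bin/sh
# Print an ifupdown stanza for a static interface with a custom MTU.
# Usage: print_iface_stanza IFACE ADDRESS NETMASK MTU
# (hypothetical helper; it only prints text and changes nothing on the system)
print_iface_stanza() {
    printf 'auto %s\n' "$1"
    printf 'iface %s inet static\n' "$1"
    printf '    address %s\n' "$2"
    printf '    netmask %s\n' "$3"
    printf '    mtu %s\n' "$4"
}

# Values taken from steps 5 and 6 above (a /24 is netmask 255.255.255.0).
print_iface_stanza enp4s0f0 192.168.151.1 255.255.255.0 9000
```

The printed text can be appended to /etc/network/interfaces as root once it looks right. Jumbo frames only help if every device in the path, including the ESXi vmkernel port and virtual switch, uses the same MTU.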

SCST Target

SCST is the target software running on Linux in both the SRP and iSCSI tests. We simply change which target driver is associated with the device. I have already discussed SCST setup in a previous post, so I will skip that and just show my iSCSI configuration within SCST.

Initial SCST.conf:

    HANDLER vdisk_fileio {
        DEVICE disk01 {
            filename /dev/zvol/t/ISCSI.PSC.Net
            nv_cache 1
            rotational 0
            write_through 0
        }
    }

    TARGET_DRIVER iscsi {
        enabled 1

        TARGET iqn.2006-10.net.vlnb:tgt {
            LUN 0 disk01
            enabled 1
            rel_tgt_id 1
        }
    }

100 Gb Ethernet (iSCSI) - ESXi Initiator

At this point we are ready to configure ESXi to connect to the Linux target. The ConnectX-4 EN driver is included in ESXi 6 by default and is also available for ESXi 5.5 on Mellanox's website. The driver is only capable of detecting and working with an adapter in Ethernet mode. Unfortunately, this meant that I had to boot the server with a Linux live CD, download the Mellanox utilities, and change the adapter to Ethernet mode on both ports. The process for this change is the same as step 2 in the Linux target configuration. I also took the time to update the device firmware while under Linux. Mellanox does sell an Ethernet-only ConnectX-4 card. That card would likely work immediately since it is not capable of being switched into InfiniBand mode.

Once this process was completed I rebooted the server under ESXi, configured a vmkernel port, and associated it with the 100Gb network interface. I then enabled the iSCSI software adapter and associated it with the vmkernel port I had created, as you would normally. I was able to connect to the datastore via iSCSI and decided it was time to do some testing.

100 Gb Ethernet Initial Results

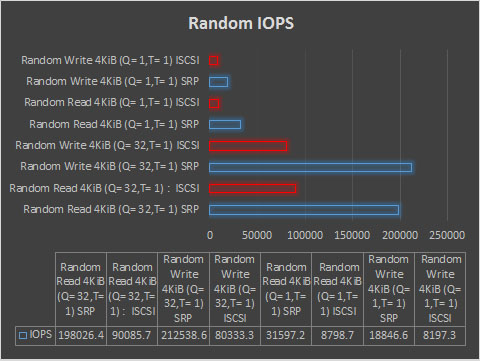

For my test I vMotioned the same Windows 10 guest that we used for the SRP benchmark over to the datastore connected via 100Gb iSCSI. Below are the initial results.

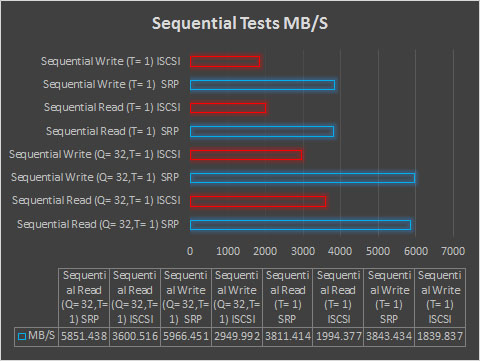

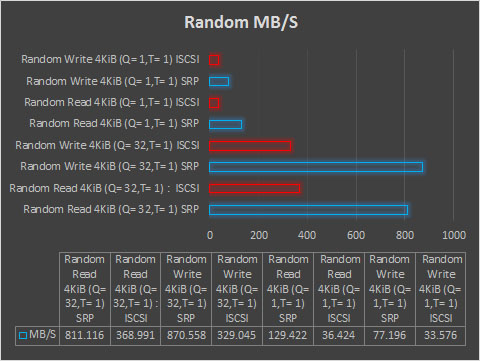

Well, that is one way to waste 3 hours. Fortunately, we are not done here. The initial results were disappointing to say the least. I had assumed iSCSI might lose to SRP in some of the random workloads due to SRP's latency advantage, but I was sure that we should be able to beat SRP on sequential workloads considering SRP has roughly half the bandwidth available to it. It is worth mentioning that SRP is hitting basically wire rate on its sequential tests, so we can't ask anything more of it.

I started searching for tunables that were available in both Linux and ESXi. Changing MaxOutstandingR2T to a value of 8 on both the target and initiator showed a decent improvement in sequential workloads. I then reached out to Bart Van Assche. Bart is a lead contributor to SCST development and he is also currently employed by SanDisk. He suggested that I take a look at configuring the following parameter: node.session.nr_sessions. The idea is that we should be able to allow multiple sessions for a single target/initiator pairing. Unfortunately, I do not believe this is possible on ESXi.

That did get me thinking about other ways to accomplish the same thing in ESXi. I ended up creating 5 vmkernel interfaces, all associated with the same 100Gb network interface. I then associated all of those vmkernel interfaces with the iSCSI adapter and enabled round robin:

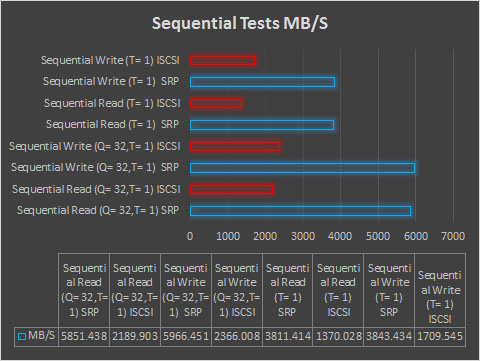
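For anyone who prefers the command line over the vSphere client, the ESXi-side changes described above could be sketched roughly as follows. This is a dry run that only prints `esxcli` commands rather than executing them. The adapter name `vmhba64`, the device identifier `naa.EXAMPLE`, and the interface names `vmk1` through `vmk5` are placeholders, not values from my environment, and the target-side MaxOutstandingR2T change in SCST is not covered here.

```shell
#!/bin/sh
# Dry run: print the esxcli commands instead of running them.
# ADAPTER, DEVICE and VMKS are placeholders (assumptions); substitute the
# values reported by "esxcli iscsi adapter list" and
# "esxcli storage nmp device list" on your own host.
ADAPTER="vmhba64"
DEVICE="naa.EXAMPLE"
VMKS="vmk1 vmk2 vmk3 vmk4 vmk5"

esxi_multipath_cmds() {
    # Raise MaxOutstandingR2T on the software iSCSI adapter.
    echo "esxcli iscsi adapter param set --adapter=$ADAPTER --key=MaxOutstandingR2T --value=8"
    # Bind each vmkernel interface to the software iSCSI adapter.
    for vmk in $VMKS; do
        echo "esxcli iscsi networkportal add --adapter=$ADAPTER --nic=$vmk"
    done
    # Select the round robin path selection policy for the LUN.
    echo "esxcli storage nmp device set --device=$DEVICE --psp=VMW_PSP_RR"
}

esxi_multipath_cmds
```

Printing the commands first makes it easy to check the adapter and device names before anything is changed on the host. After binding new vmkernel ports, a rescan of the adapter is typically needed before the extra paths show up.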

SRP is going out of its way to hide the gains we achieved with that change due to the scale it created on the charts. We can see from the numbers that we went from 2189 MBps to 3600 MBps in our sequential tests, so we are certainly heading in the right direction. By default, VMware's round robin policy sends 1000 I/Os down one path (here, one vmkernel port) before switching to the next. Past experience with Dell SANs has shown me that this should be changed to 1 I/O per port.

The process for changing the round robin policy to 1 iop can be found here: Change Round Robin to 1 IOP
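As a rough sketch of that change, the round robin I/O limit is set per device with `esxcli`. The snippet below only prints the command (a dry run); the helper name `rr_iops_cmd` and the device identifier `naa.EXAMPLE` are placeholders that need to be replaced with the identifier of the actual iSCSI LUN.

```shell
#!/bin/sh
# Dry run: print the command that sets how many I/Os round robin sends
# down one path before switching to the next.
# $1 = device identifier (naa.*), $2 = I/O count per path
rr_iops_cmd() {
    echo "esxcli storage nmp psp roundrobin deviceconfig set --device=$1 --type=iops --iops=$2"
}

# naa.EXAMPLE is a placeholder, not a real device.
rr_iops_cmd "naa.EXAMPLE" 1
```

The current setting can be checked with `esxcli storage nmp psp roundrobin deviceconfig get --device=<device>` before and after the change.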

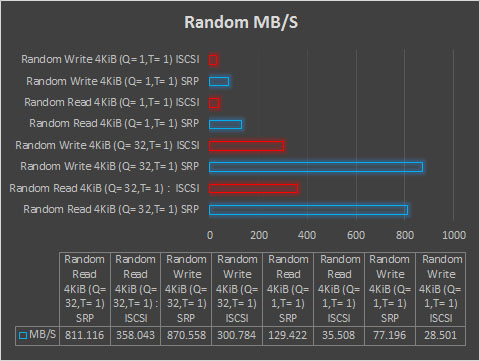

The round robin change had a dramatic impact across the board. We finally beat SRP in a few benchmarks. The sequential read and write tests with a queue depth of 32 beat SRP. We can attribute this to the added bandwidth available. All other tests are still won by SRP, but we see the gap has been closed substantially. SRP will be uncatchable in those random tests due to its extremely low latency; you simply can do more IOPS in a given amount of time with SRP. Of note, the ConnectX-4 ESXi drivers are still in their infancy. Some companies would choose to run 100Gb Ethernet over 56Gb SRP due to the fact that iSCSI is easily routable, while SRP is more of a rack connectivity solution. I do hope that Mellanox changes their stance on SRP under VMware with the ConnectX-4 cards though; the performance is too good to ignore. Mellanox made mention that they will likely release an iSER driver for ESXi. iSER is iSCSI over RDMA, while SRP is SCSI over RDMA. iSER will likely perform worse than SRP but better than iSCSI.
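To put the bandwidth argument in perspective, here is a small back-of-the-envelope calculation. It converts the nominal link speeds to MB/s and shows what fraction of the 100Gb link the two sequential figures quoted earlier (2189 MBps and 3600 MBps) represent. It assumes decimal units (1 Gb/s = 1000 Mb/s) and ignores encoding and protocol overhead, so real-world ceilings are somewhat lower. The function names are illustrative.

```shell
#!/bin/sh
# Nominal line rate in MB/s for a link speed given in Gb/s.
# Assumes 1 Gb/s = 1000 Mb/s and 8 bits per byte; overhead is ignored.
line_rate_mbps() {
    echo $(( $1 * 1000 / 8 ))
}

# Integer percentage (rounded down) of the nominal line rate.
# $1 = measured throughput in MB/s, $2 = link speed in Gb/s
utilization_pct() {
    echo $(( $1 * 100 / $(line_rate_mbps "$2") ))
}

echo "56 Gb nominal:  $(line_rate_mbps 56) MB/s"
echo "100 Gb nominal: $(line_rate_mbps 100) MB/s"
echo "2189 MBps on 100 Gb: $(utilization_pct 2189 100)%"
echo "3600 MBps on 100 Gb: $(utilization_pct 3600 100)%"
```

Even the improved figure is well under a third of what the 100Gb link can nominally carry, which suggests the bottleneck is in the iSCSI path rather than the wire.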

100 Gb Ethernet Next Steps

I am going to continue working on improving the results. Since iSCSI is somewhat CPU intensive, there is likely some extra performance to be had by tweaking the CPU assignments on the target. It is often possible to gain performance by manually assigning certain processes and interrupts to specific CPUs.
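As a starting point for that kind of tuning, interrupt affinity on Linux is controlled by writing a hexadecimal CPU bitmask to /proc/irq/<n>/smp_affinity. The sketch below converts a CPU index to that mask and prints (rather than runs) the command that would pin an IRQ. The IRQ number 120 and CPU 4 are made-up examples; the real IRQ numbers for the adapter would come from /proc/interrupts. I have not yet tested which assignments actually help.

```shell
#!/bin/sh
# Convert a CPU index into the hexadecimal bitmask used by
# /proc/irq/<n>/smp_affinity (bit N set = CPU N allowed).
# Intended for CPU indices below 32; larger systems use
# comma-separated 32-bit groups, which this sketch does not produce.
cpu_to_mask() {
    printf '%x\n' $(( 1 << $1 ))
}

# Dry run: print the command that would pin an IRQ to a single CPU.
# $1 = IRQ number, $2 = CPU index
pin_irq_cmd() {
    echo "echo $(cpu_to_mask "$2") > /proc/irq/$1/smp_affinity"
}

# IRQ 120 and CPU 4 are hypothetical values for illustration.
pin_irq_cmd 120 4
```

Note that the irqbalance service, if running, may overwrite manual affinity settings. Processes can be pinned in a similar spirit with `taskset`.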